Tensors are mathematical objects that generalize scalars, vectors, and matrices to any number of dimensions. They play a central role in data science, machine learning, and deep learning.

What is a Tensor?

- A scalar is a 0-dimensional tensor: $a$.

- A vector is a 1-dimensional tensor: $v = [v_1, v_2, \dots, v_n]$.

- A matrix is a 2-dimensional tensor:

$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}$$

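- A higher-order tensor has three or more dimensions. Its entries carry one index per dimension, such as $T_{ijk}$ for a 3D tensor $T$, where $i$, $j$, and $k$ index its three dimensions. For example, a $2 \times 2 \times 2$ tensor can be written as two $2 \times 2$ slices, one for each value of the first index $i$:

$$T = \left[ \begin{bmatrix} T_{111} & T_{112} \\ T_{121} & T_{122} \end{bmatrix}, \begin{bmatrix} T_{211} & T_{212} \\ T_{221} & T_{222} \end{bmatrix} \right]$$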
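Here is a minimal sketch that assumes NumPy, the library used in this article's Python example. A tensor's number of indices corresponds to an array's `ndim` attribute, and its size along each index corresponds to `shape`. The values are arbitrary placeholders. Note that NumPy counts indices from 0, while the math notation above counts from 1.

```python
import numpy as np

# Tensors of increasing rank; ndim counts the indices needed to address one entry
scalar = np.array(5.0)                     # rank 0: shape ()
vector = np.array([1.0, 2.0, 3.0])         # rank 1: shape (3,)
matrix = np.array([[1.0, 2.0],
                   [3.0, 4.0]])            # rank 2: shape (2, 2)
tensor3 = np.arange(8).reshape(2, 2, 2)    # rank 3: shape (2, 2, 2)

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("3D tensor", tensor3)]:
    print(f"{name}: ndim={t.ndim}, shape={t.shape}")

# 0-based indexing: the entry written T_{121} in 1-based notation is tensor3[0, 1, 0]
print(tensor3[0, 1, 0])
# The first 2x2 slice (i = 1 in the math notation)
print(tensor3[0])
```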

For example, a color image is represented as a 3D tensor of shape $(H, W, C)$, where $H$ is the height, $W$ is the width, and $C$ is the number of color channels (e.g., RGB).
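The $(H, W, C)$ layout can be sketched in NumPy as follows. The tiny $2 \times 3$ image size is a hypothetical choice for illustration. The `uint8` values from 0 to 255 are one common pixel encoding; floats in $[0, 1]$ are another. Some libraries use a channels-first $(C, H, W)$ layout instead, so check the convention of the library you are working with.

```python
import numpy as np

# Hypothetical image size: 2 pixels tall, 3 pixels wide, 3 color channels (RGB)
H, W, C = 2, 3, 3
image = np.zeros((H, W, C), dtype=np.uint8)

# Make the top-left pixel pure red: channel 0 (R) at full intensity
image[0, 0, 0] = 255

# Indexing one pixel returns its C channel values
print(image[0, 0].tolist())  # [255, 0, 0]

# Slicing the last axis extracts one channel as an H x W matrix
red = image[:, :, 0]
print(red.shape)             # (2, 3)
```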

Applications of Tensors in Data Science

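- Deep Learning: Neural network computations rely heavily on tensors. Inputs, weights, activations, and gradients are all stored as tensors. The forward and backward passes (backpropagation) are sequences of tensor operations such as matrix multiplications and element-wise functions.

- Computer Vision: An image is stored as a 3D tensor in which each pixel has one value per color channel (e.g., red, green, and blue). A batch of images adds a fourth dimension.

- Natural Language Processing: A sentence is typically represented as a 2D tensor of shape (sequence length, embedding dimension), with one embedding vector per word or token. A batch of sentences is represented as a 3D tensor. Models operate on these tensors to capture contextual relationships between words.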
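The following rough NumPy sketch shows the shapes involved in each application. All sizes, the ReLU activation, and the random values are illustrative assumptions rather than details of any particular model. Only the forward computation is shown. Deep learning frameworks such as PyTorch and TensorFlow provide their own tensor types, but the shapes follow the same pattern.

```python
import numpy as np

rng = np.random.default_rng(0)

# Deep learning: one dense layer applied to a batch of inputs.
# Hypothetical sizes: 4 examples, 3 input features, 2 output units.
X = rng.normal(size=(4, 3))   # inputs, shape (batch, features_in)
W = rng.normal(size=(3, 2))   # weights, shape (features_in, features_out)
b = np.zeros(2)               # bias, shape (features_out,)
activations = np.maximum(X @ W + b, 0.0)  # ReLU(XW + b), shape (4, 2)

# Computer vision: a batch of 8 RGB images of size 32x32 is a 4D tensor
images = rng.random((8, 32, 32, 3))       # shape (batch, H, W, C)

# NLP: an embedding table maps token ids to vectors (hypothetical sizes)
vocab_size, embed_dim = 10, 5
embeddings = rng.normal(size=(vocab_size, embed_dim))
token_ids = np.array([[1, 4, 2],
                      [3, 3, 0]])         # 2 sentences, 3 tokens each
sentence_tensors = embeddings[token_ids]  # shape (2, 3, 5)

print(activations.shape, images.shape, sentence_tensors.shape)
```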

Tensor Operations

- Addition: Two tensors of the same shape are added element-wise. For example, for two $2 \times 2$ matrices $A$ and $B$:

$$A + B = \begin{bmatrix} a_{11} + b_{11} & a_{12} + b_{12} \\ a_{21} + b_{21} & a_{22} + b_{22} \end{bmatrix}$$
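In NumPy (assumed here), the `+` operator performs this element-wise addition directly. NumPy also extends the same-shape rule: arrays with different but compatible shapes are broadcast. For example, a length-2 vector is added to each row of a $2 \times 2$ matrix. Incompatible shapes raise a `ValueError`.

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4]])
B = np.array([[10, 20],
              [30, 40]])

# Same shape: each output entry is a_ij + b_ij
C = A + B
print(C.tolist())  # [[11, 22], [33, 44]]

# Broadcasting: the vector [100, 200] is added to every row of A
D = A + np.array([100, 200])
print(D.tolist())  # [[101, 202], [103, 204]]

# Incompatible shapes cannot be added
try:
    A + np.ones((3, 3))
except ValueError as err:
    print("Shape mismatch:", err)
```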

- Dot Product: The dot product (scalar product) is calculated by multiplying corresponding components of two vectors and summing the results. For two vectors:

$u = [u_1, u_2, \dots, u_n]$

$v = [v_1, v_2, \dots, v_n]$

The dot product is given by:

$$u \cdot v = \sum_{i=1}^n u_i v_i$$

where:

- $u_i$ and $v_i$ are the $i$-th components of the vectors $u$ and $v$, respectively.

- $n$ is the number of components in each vector. Both vectors must have the same length.

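The result is a scalar. Geometrically, $u \cdot v = \|u\| \, \|v\| \cos\theta$, where $\theta$ is the angle between the two vectors. The dot product therefore equals the signed length of the projection of $u$ onto $v$, multiplied by $\|v\|$. It is exactly that projection length when $v$ is a unit vector. If $u \cdot v = 0$ for nonzero vectors $u$ and $v$, the vectors are orthogonal.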
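The definition and its geometric reading can be checked with a short NumPy sketch. NumPy is an assumption here, and the vectors are arbitrary examples. For these vectors, $1 \cdot 4 + 2 \cdot 5 + 3 \cdot 6 = 32$.

```python
import numpy as np

u = np.array([1.0, 2.0, 3.0])
v = np.array([4.0, 5.0, 6.0])

# Definition: multiply corresponding components, then sum
manual = sum(ui * vi for ui, vi in zip(u, v))
builtin = np.dot(u, v)   # same result; u @ v also works
print(manual, builtin)   # 32.0 32.0

# Geometric form: u . v = |u| |v| cos(theta)
cos_theta = builtin / (np.linalg.norm(u) * np.linalg.norm(v))

# Scalar projection of u onto v: (u . v) / |v|
proj_len = builtin / np.linalg.norm(v)

# Orthogonal vectors have a zero dot product
print(np.dot(np.array([1.0, 0.0]), np.array([0.0, 1.0])))  # 0.0
```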

- Tensor Product: The tensor product combines two tensors into a tensor of higher rank. For two vectors, it is also called the outer product. For example, given two vectors:

$u = [u_1, u_2, \dots, u_m]$

$v = [v_1, v_2, \dots, v_n]$

The tensor product $u \otimes v$ is an $m \times n$ matrix (a rank-2 tensor) whose $(i, j)$ entry is $u_i v_j$:

$$u \otimes v = \begin{bmatrix} u_1 v_1 & u_1 v_2 & \cdots & u_1 v_n \\ u_2 v_1 & u_2 v_2 & \cdots & u_2 v_n \\ \vdots & \vdots & \ddots & \vdots \\ u_m v_1 & u_m v_2 & \cdots & u_m v_n \end{bmatrix}$$

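In general, the tensor product applies to tensors of any rank. For a tensor $A$ of rank $p$ and a tensor $B$ of rank $q$, it is written $A \otimes B$. Each entry of the result is the product of one entry of $A$ and one entry of $B$:

$$(A \otimes B)_{i_1 \dots i_p j_1 \dots j_q} = A_{i_1 \dots i_p} \, B_{j_1 \dots j_q}$$

Each entry carries all $p + q$ indices, so the rank of the result is the sum of the ranks of $A$ and $B$. Because every entry of one tensor is paired with every entry of the other, the operation is used in machine learning and physics to represent interactions between components.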
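Both the vector case and the general case can be sketched with NumPy's outer-product functions, which are assumed here as the implementation. `np.outer` handles two vectors. `np.multiply.outer`, or `np.tensordot` with `axes=0`, handles arrays of any rank. The arrays are arbitrary examples.

```python
import numpy as np

# Vector case: u (m = 3) and v (n = 2) give an m x n matrix with entries u_i * v_j
u = np.array([1, 2, 3])
v = np.array([10, 20])
M = np.outer(u, v)
print(M.tolist())  # [[10, 20], [20, 40], [30, 60]]

# General case: A has rank 2 and B has rank 1, so their tensor product has rank 3.
# Entry T[i, j, k] = A[i, j] * B[k], and the shape is A.shape + B.shape
A = np.arange(6).reshape(2, 3)
B = np.array([1, -1, 2, 0])
T = np.multiply.outer(A, B)
print(T.shape, T.ndim)  # (2, 3, 4) 3

# np.tensordot with axes=0 (no indices summed over) computes the same product
assert np.array_equal(T, np.tensordot(A, B, axes=0))
```

A related but different operation is `np.kron`, the Kronecker product. It contains the same pairwise products but arranges them as a larger block matrix. For two matrices, the result is therefore still a matrix rather than a rank-4 tensor.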

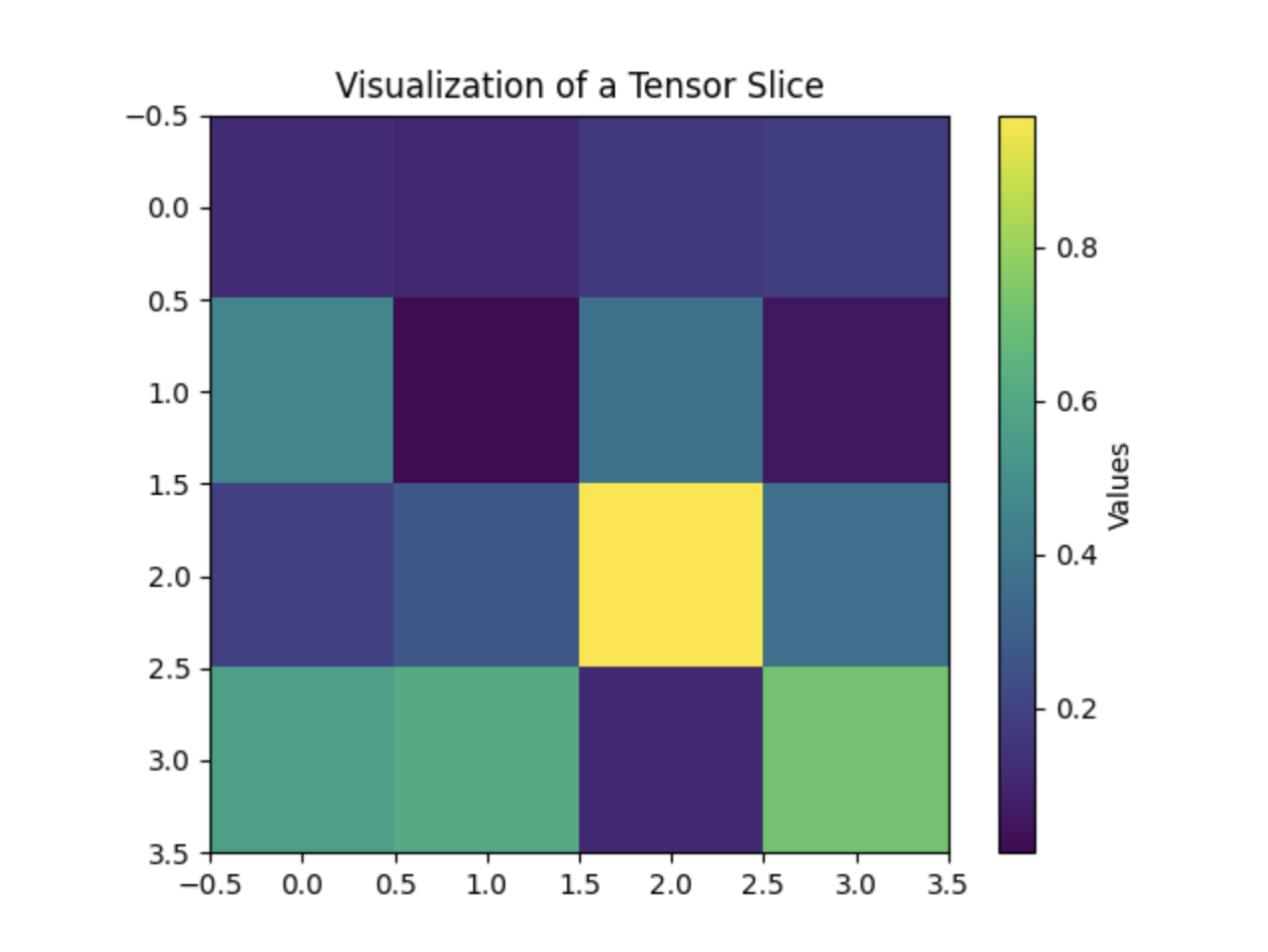

Visualization with Python

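Here’s a Python example that creates a random 3D tensor shaped like a small RGB image and visualizes one of its color channels:

```python
import numpy as np
import matplotlib.pyplot as plt

# Create a random 3D tensor of shape (H, W, C) = (4, 4, 3):
# a tiny 4x4 "RGB image" with values in [0, 1)
tensor = np.random.rand(4, 4, 3)

# Select one 2D slice: channel index 2 (the blue channel)
slice_ = tensor[:, :, 2]

# Display the slice as a heatmap with a color scale
plt.imshow(slice_, cmap='viridis')
plt.colorbar(label="Values")
plt.title("Visualization of a Tensor Slice")
plt.show()
```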